As AI-powered tools become commonplace in college coursework, the question of whether these technologies enhance students’ learning or encourage shortcuts has become increasingly urgent. New research conducted on behalf of Northern Kentucky University (NKU) explores how both educators and recent college graduates view this and related issues.

A questionnaire gathered responses from 804 recent graduates and 200 college professors nationwide to understand how AI is transforming the academic experience. The study provides practical insights for students, educators and institutions seeking to use AI as a learning tool while maintaining fairness, integrity and meaningful skill development.

Key Takeaways

- 33% of professors said their workload has increased since AI tools became common.

- Only 27% of professors said they feel very or extremely confident in detecting AI-generated student work.

- 71% of recent graduates said they used AI to help complete their coursework.

- Nearly one in 10 recent graduates said they don’t think they would have graduated without using AI tools.

- 65% of graduates said that using AI in college better prepared them for the workplace.

How Professors Define the Line Between AI Help and Cheating

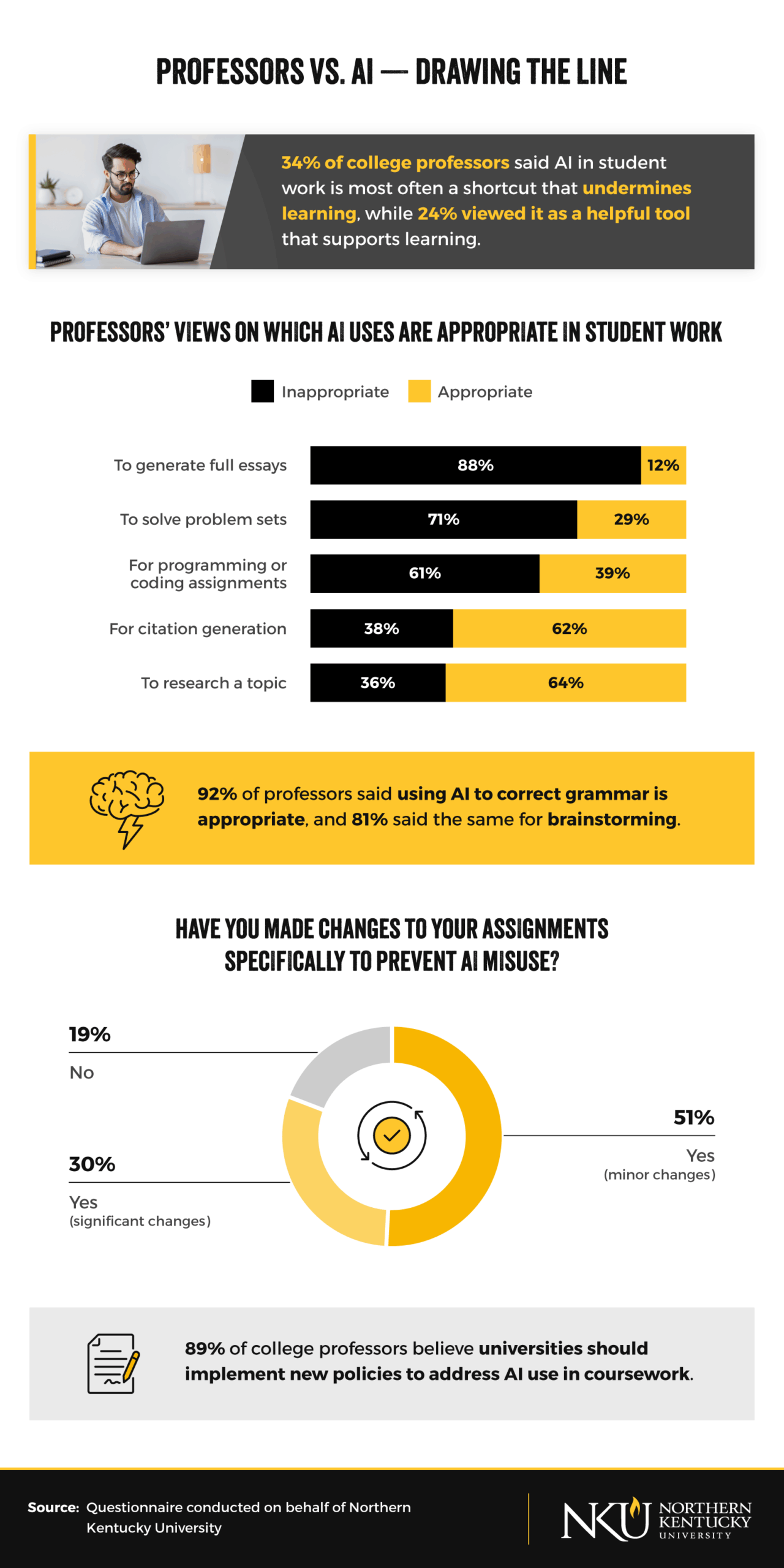

Educators across the country are determining where to draw the line between acceptable AI use and academic dishonesty. While many professors recognize AI’s potential to enhance learning, they also see an urgent need for updated academic policies to guide its use among students.

Most professors (80%) said not all uses of AI in student work should be considered cheating, suggesting that academic norms around AI are shifting. About three-quarters (76%) believed that AI, when applied thoughtfully, could strengthen student learning outcomes by enhancing creativity and comprehension.

Professors said the most appropriate uses of AI include correcting grammar (92%), brainstorming ideas (83%) and researching a topic (64%). By contrast, most considered generating full essays (88%), solving problem sets (71%) and completing programming or coding work (61%) to be inappropriate uses. To prevent AI misuse, 81% of professors reported making minor to significant changes to their assignments.

Most professors also agreed that clear institutional standards are needed. Nearly nine in 10 (89%) said universities should implement new policies addressing the role of AI in coursework. The findings suggest that while AI use is not always unethical, its responsible use in higher education depends on transparency, structure and leadership from academic institutions.

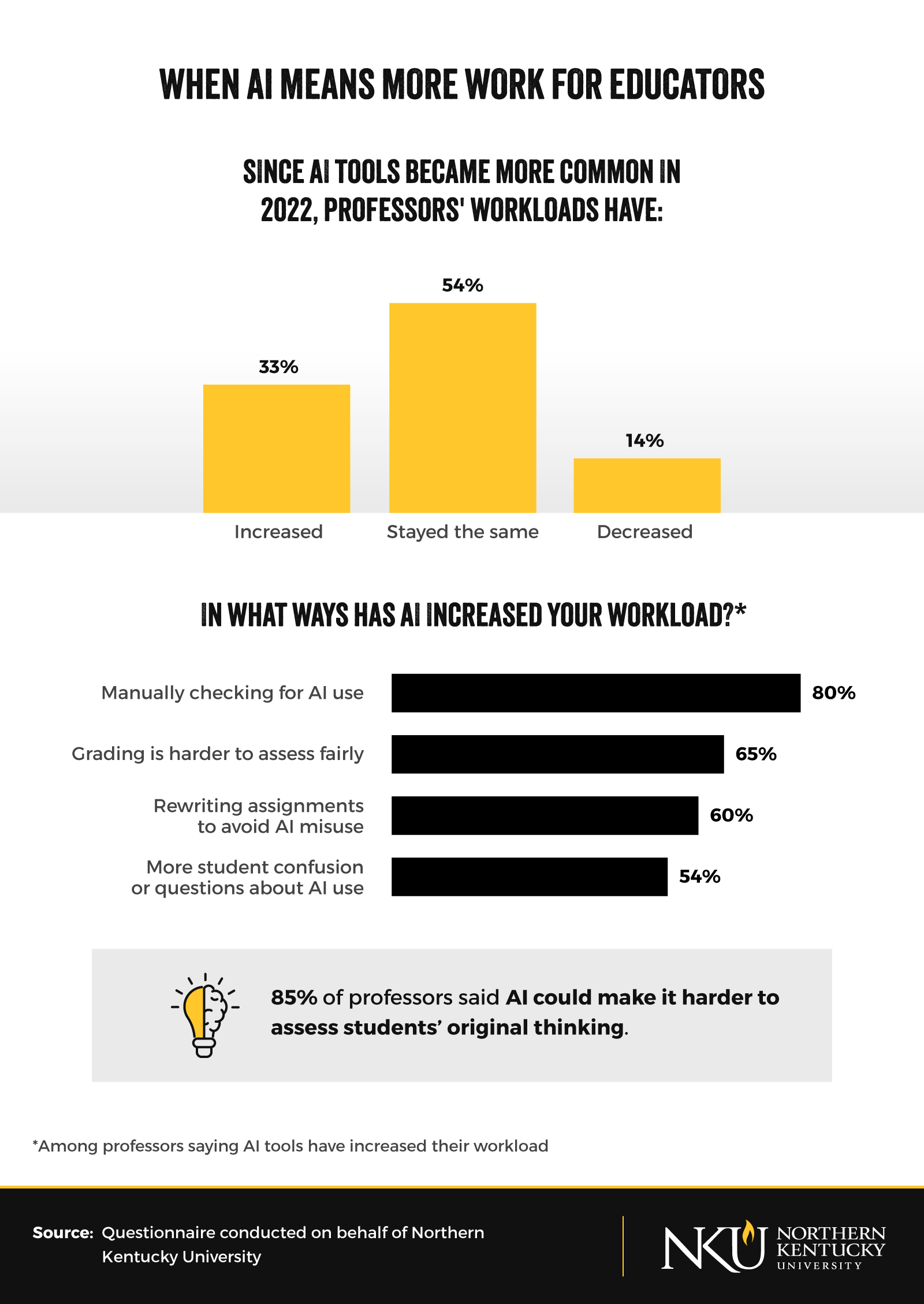

AI Has Added to Professors’ Workloads

While AI tools can simplify some aspects of teaching, they have also introduced new challenges. Many professors are now expected to distinguish between authentic and AI-generated work, a task complicated by inconsistent detection tools and unclear institutional guidelines.

More than half of professors (54%) reported no change in their workloads since the widespread adoption of AI tools, while 14% said theirs had decreased. However, one in three (33%) reported an increase, most often due to the need to manually check for AI-generated content (80%). Only 27% of professors said they felt very or extremely confident in identifying AI-generated work.

Just 29% believed plagiarism-detection tools accurately recognize AI-generated material, while 39% viewed them as ineffective and 32% were unsure. As professors navigate these challenges, students are also forming their own opinions about AI’s place in academic work.

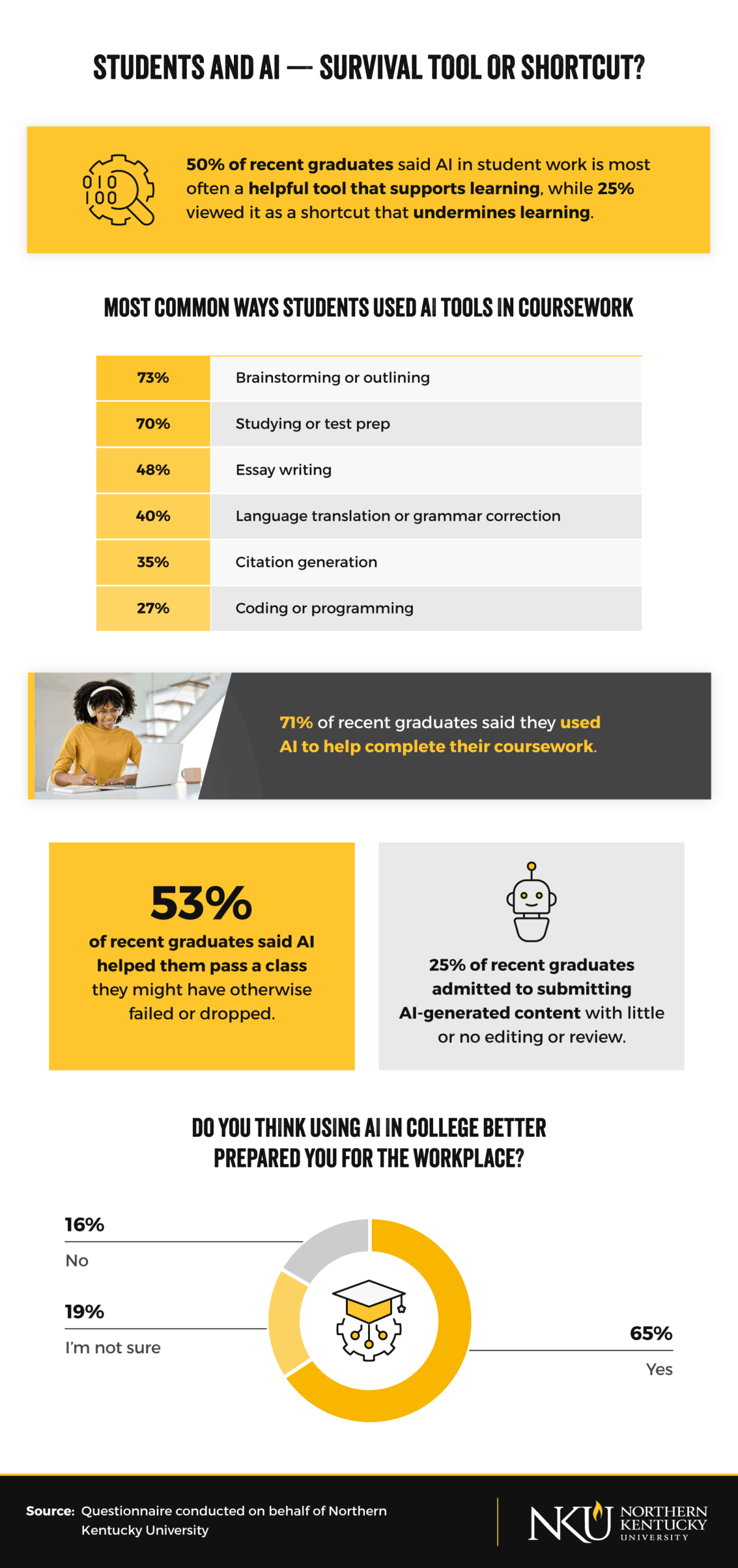

Students See AI as a Study Partner, Not a Shortcut

For recent graduates, AI has become less of a cheat code and more of a collaborator in achieving academic and professional goals. Many view it as an essential support system that enhances productivity and skill development.

Recent college graduates said that using AI gave them an advantage in coursework (82%) and time management (70%). More than half also credited AI tools with improving their communication skills (55%) and job interview readiness (55%).

Graduates most often used AI for brainstorming and outlining assignments (73%) or for studying and test preparation (70%). Notably, 71% admitted to using AI for coursework even when unsure whether it was permitted, underscoring the need for clearer institutional guidance.

Over half of recent graduates (53%) said AI helped them pass a class they might have otherwise failed or dropped. Nearly one in 10 graduates said they might not have completed their degree without AI tools, and 65% agreed that using AI in college better prepared them for the workplace. For many, these tools bridged the gap between learning theory and real-world application.

A Practical Guide to Using AI Responsibly in Higher Education

As AI tools become integral to academic life, universities, professors and students each play a critical role in shaping responsible use. This guide offers actionable steps for integrating AI in ways that enhance learning, preserve academic integrity and foster digital literacy.

For Universities

- Establish clear, campus-wide policies: Define acceptable and unacceptable uses of AI across disciplines and ensure students and faculty receive consistent guidance.

- Invest in training and resources: Offer regular workshops on AI ethics, detection tools and best practices to help faculty and students stay informed.

- Promote transparency and communication: Require students to disclose when and how AI tools are used in coursework to encourage accountability.

- Adopt human-in-the-loop practices: Maintain human oversight in grading and assessment to safeguard fairness and uphold educational standards.

- Evaluate and update policies regularly: Revisit AI policies each academic year to reflect new technologies, evolving student behavior and faculty feedback.

For Professors

- Set clear expectations for assignments: Clearly define when AI assistance is permitted (e.g., brainstorming or grammar correction) and when it is not (e.g., full essay generation).

- Model responsible use: Demonstrate how AI can complement critical thinking and creativity rather than replace them.

- Monitor for signs of overreliance: Watch for shifts in writing style, tone or accuracy that might indicate inappropriate AI use.

- Balance innovation with integrity: Incorporate AI-related assignments that emphasize understanding and analysis, not automation.

- Encourage open dialogue: Create space for students to ask questions about AI use without fear of penalty, fostering a culture of learning over punishment.

For Students

- Use AI as a learning aid, not a shortcut: Apply AI tools to brainstorm, organize thoughts or check for accuracy, never to complete full assignments.

- Always disclose AI use: Be transparent about when and how AI contributed to your work to maintain academic honesty.

- Retain human judgment: Verify AI-generated information and ensure final work reflects your own understanding and perspective.

- Develop digital literacy: Learn how AI systems work, including their biases and limitations, to use them more responsibly.

- Seek clarification early: Ask instructors about acceptable AI use for each course to avoid unintentional misuse.

Building a Culture of Responsible AI Use

AI is already a part of the classroom, and it is here to stay. The challenge now is helping students use it to think more critically and creatively. When professors set clear expectations and model responsible use, students are more likely to do the same. Building that shared understanding takes time, but it transforms AI from a shortcut into a valuable learning partner.

Real progress happens when faculty, administrators and students work together to build a culture that values curiosity, honesty and growth. When used with intention and guidance, AI can strengthen learning, inspire confidence and prepare students to thrive in a technology-driven world.

Methodology

A questionnaire was administered to 804 recent college graduates and 200 college professors on behalf of Northern Kentucky University to explore whether respondents see AI as an academic ally or a shortcut for cheating. Recent graduates confirmed that they had graduated within the past 12 months. Data was collected in October 2025. This is a non-scientific, exploratory questionnaire and is not intended to represent all college students and professors.

About Northern Kentucky University

NKU’s online Doctor of Education (Ed.D.) in Educational Leadership – Higher Education Administration program prepares educators to lead and innovate in postsecondary settings. This CAEP-accredited program combines theory with practical experience, offering courses on student development, higher education history and emerging trends. Guided by experienced practitioners, students gain the insight and leadership skills needed to drive positive change in colleges, universities and educational organizations.

Fair Use Statement

Information in this article may be used for noncommercial purposes only. When sharing or referencing this content, please provide proper attribution and a link back to NKU.